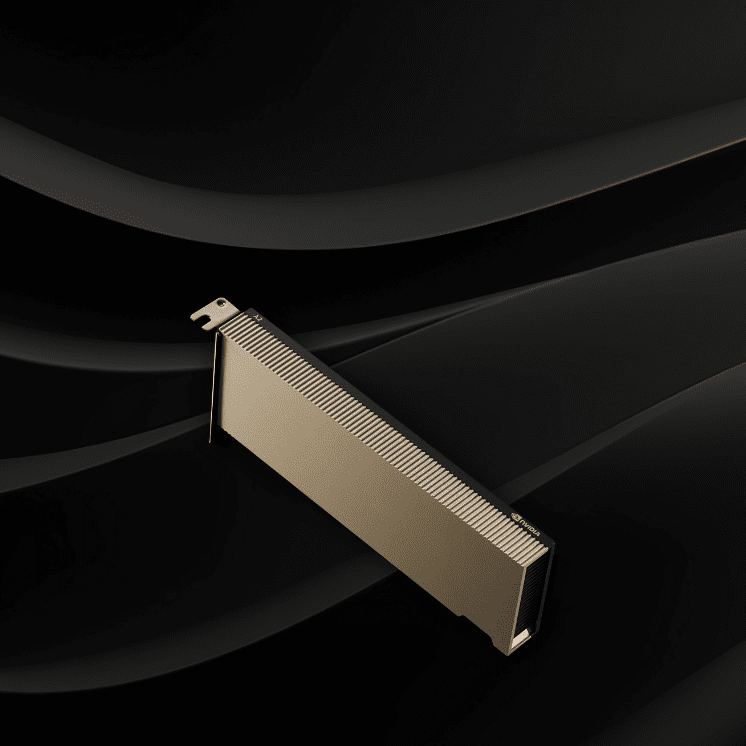

A2 GPU

Lightweight AI Inference · Edge Computing GPU

Entry-level inference for the intelligent edge

| Specification | Value |
| --- | --- |
| Peak FP32 | 4.5 TF |
| TF32 Tensor Core | 9 TF \| 18 TF¹ |
| BFLOAT16 Tensor Core | 18 TF \| 36 TF¹ |
| Peak FP16 Tensor Core | 18 TF \| 36 TF¹ |
| Peak INT8 Tensor Core | 36 TOPS \| 72 TOPS¹ |
| Peak INT4 Tensor Core | 72 TOPS \| 144 TOPS¹ |
| RT Cores | 10 |
| Media engines | 1 video encoder, 2 video decoders (includes AV1 decode) |
| GPU memory | 16 GB GDDR6 |
| GPU memory bandwidth | 200 GB/s |
| Interconnect | PCIe Gen4 x8 |
| Form factor | 1-slot, low-profile PCIe |
| Max thermal design power (TDP) | 40–60 W (configurable) |
| Virtual GPU (vGPU) software support² | NVIDIA Virtual PC (vPC), NVIDIA Virtual Applications (vApps), NVIDIA RTX Virtual Workstation (vWS), NVIDIA AI Enterprise, NVIDIA Virtual Compute Server (vCS) |
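One rough way to read these figures together is to divide each peak throughput by the 200 GB/s memory bandwidth, which gives a roofline "ridge point". A kernel that performs fewer operations per byte of memory traffic than this value is likely limited by bandwidth rather than by Tensor Core throughput. The sketch below is a back-of-the-envelope calculation, not an NVIDIA tool. The dictionary names and helper functions are hypothetical, and the values are copied from the table. It also assumes that the ¹-marked figures are NVIDIA's structured-sparsity peaks, as on other Ampere datasheets.

```python
# Back-of-the-envelope sketch (hypothetical helpers, not an NVIDIA API).
# Values copied from the A2 table: TF = 10^12 FLOPS, TOPS = 10^12 integer ops/s.
PEAK_DENSE = {
    "FP32": 4.5e12,  # CUDA-core FP32, not a Tensor Core figure
    "TF32": 9e12,
    "BF16": 18e12,
    "FP16": 18e12,
    "INT8": 36e12,
    "INT4": 72e12,
}

# Figures marked with a superscript 1 in the table.
# ASSUMPTION: these are structured-sparsity peaks.
PEAK_SPARSE = {
    "TF32": 18e12,
    "BF16": 36e12,
    "FP16": 36e12,
    "INT8": 72e12,
    "INT4": 144e12,
}

MEM_BANDWIDTH = 200e9  # bytes per second ("GPU memory bandwidth" row)


def ridge_point(peak_ops_per_s: float, bandwidth: float = MEM_BANDWIDTH) -> float:
    """Operations per byte of DRAM traffic at which the roofline model says
    a kernel stops being memory-bound and can become compute-bound."""
    return peak_ops_per_s / bandwidth


def sparse_speedup(precision: str) -> float:
    """Ratio of the superscript-1 figure to the dense figure for one precision."""
    return PEAK_SPARSE[precision] / PEAK_DENSE[precision]


if __name__ == "__main__":
    for name, peak in PEAK_DENSE.items():
        print(f"{name:>5}: ridge point ~ {ridge_point(peak):.1f} ops/byte")
    for name in PEAK_SPARSE:
        print(f"{name:>5}: sparse/dense = {sparse_speedup(name):.1f}x")
```

For dense FP16, for example, the ridge point works out to 18 TF ÷ 200 GB/s = 90 FLOP per byte, and every ¹ figure is exactly 2.0× its dense counterpart. Real kernels rarely sustain peak throughput, so these ratios are best treated as upper bounds.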